This morning, as my tablet’s virtual assistant replied to my “good morning” and turned on the BBC at my request, I started wondering: if I heard that voice call out from the middle of a crowd, would I recognize it? Could I sing alongside it in a duet with two-part harmony?

Or is it just another cheeky chatbot that’s getting wittier by the day—but hindered by an impersonal sound?

Conversational agents are software programs capable of understanding natural language and responding to human speech. And they present a huge opportunity for digital marketing strategists to define their brands’ voices in a new, almost four-dimensional way. After all, these agents exist both as intelligent assistants and as forms of communication within apps—both of which are major opportunities for audiences to get a sense of how brands look, feel, and sound.

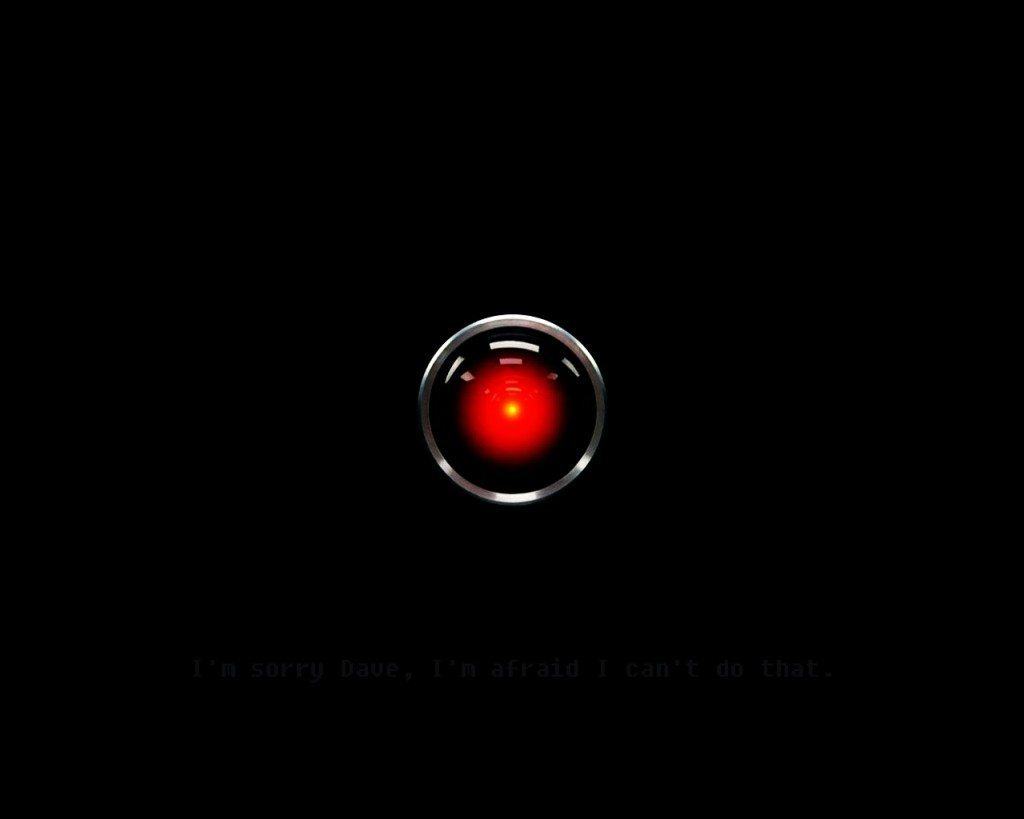

An actual, audible brand voice might sound like a far-off future. Today’s conversational agents no longer speak in the flat, monotone voice of HAL 9000 from 2001: A Space Odyssey, but they still sound noticeably unnatural. And if anything, brands will want their conversational agents (as with their chatbots, stories, and other media) to seem more human, not less. But recent developments suggest that we may not be far from a marketing transformation: a future in which computer voices become indistinguishable from human ones, in part because they are developing personalities.

Here’s a closer look at the three dimensions of conversational agents today, and what a four-dimensional future might mean for brands and users alike.

Dimension 1: Utility

As machines become better conversationalists, they inch ever closer to what Japanese roboticist Masahiro Mori called the “uncanny valley.” As explained in IEEE Robotics & Automation Magazine, Mori coined the term in 1970 to describe his hypothesis that “a person’s response to a humanlike robot would abruptly shift from empathy to revulsion as it approached, but failed to attain, a lifelike appearance.”

Today, nearly 50 years later, designers of human–computer interaction are still struggling to cross the uncanny valley and reach a place where humans interact comfortably with their robotic counterparts. More and more people are comfortable using technology to enhance their lives, but many still hesitate when a machine starts to seem just a little too human.

That challenge hasn’t scared any brands off, though—in fact, Google, Facebook, Amazon, and many other major players are all currently working to develop and humanize products that are both intelligent and useful.

Amazon’s Alexa, one of the younger siblings in the intelligent assistant family, has already won over many consumers. It’s easy to use, and it’s cool: just saying Alexa’s name to the Amazon Echo opens up a world of possibility, letting users find restaurants, summon transportation, and more. But it isn’t mere utility that makes the Echo unique; it’s Alexa’s voice. As Nellie Bowles, technology reporter for The Guardian, wrote: “it might not be the Echo’s good tech that’s winning over people. It might be Alexa herself—patient, present, listening.”

Alexa is already incredibly useful, and she gets more helpful all the time. Amazon has been building successful partnerships with third-party brands and expanding the assistant’s capabilities with new apps, which the company calls “skills.” At the time of writing, Alexa has more than 3,000 skills, including Uber, Spotify, Twitter, and Yahoo Sports Fantasy Football, and the list keeps growing. That’s one of the best things about these technologies: brands have the power to shape them, helping them mature (to grow up, even) through the services they offer.

But how does that maturity affect a voice’s personality? And what goes into creating a robotic character that brands can fine-tune to fit their needs? To learn more, I spoke with Michael Picheny, senior manager of the Watson Multimodal Lab at IBM.

Dimension 2: Voice Personality

My first question for Picheny was this: what was the Watson Multimodal Lab team looking for in the human voice it used to build Watson’s sound?

“We were looking for a voice that was slow, steady, and most importantly, ‘pleasant,’” he said. “We wound up choosing a voice that sounded optimistic and energetic. We interviewed 25 voice actors, looking for the right sound. We even experimented with the voices in various ways.”

Of course, “pleasant” is subjective, and the Watson team knows that better than anyone, which is why it gives users the chance to experiment with styles of their own. “For example,” said Picheny, “in the Watson Text to Speech API, we recently added the capability to mark up the text to be spoken with inflections expressing ‘apology,’ ‘uncertainty,’ and ‘good news.’ In addition, Watson TTS now includes a voice transformation feature that can adjust timbre, phonation, breathiness, tone and speech rate. Our work in speech technology aims to help developers synthesize voices in technology, such as call center responders or robots, to interact more naturally with humans.”
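To make the idea of “marking up the text to be spoken” a little more concrete, here is a minimal Python sketch that wraps plain text in SSML (the W3C Speech Synthesis Markup Language), adding an expressive inflection and a custom voice transformation. The `mark_up` helper is hypothetical, and the `express-as` and `voice-transformation` element names and their attribute values are assumptions modeled on IBM’s SSML extensions for Watson Text to Speech, not a verified reference; check the service’s current documentation before relying on any of them.

```python
from xml.sax.saxutils import escape, quoteattr

# Assumption: inflection names modeled on IBM's Watson TTS SSML extensions.
EXPRESSIONS = {"apology": "Apology", "uncertainty": "Uncertainty", "good news": "GoodNews"}


def mark_up(text, expression=None, **transform):
    """Hypothetical helper: wrap text in SSML, optionally adding an
    expressive inflection and a custom voice transformation
    (keyword arguments such as rate, breathiness, or timbre)."""
    body = escape(text)  # escape &, <, > so the text is safe inside XML
    if expression is not None:
        if expression not in EXPRESSIONS:
            raise ValueError(f"unknown expression: {expression!r}")
        body = f"<express-as type={quoteattr(EXPRESSIONS[expression])}>{body}</express-as>"
    if transform:
        # Sort attributes by name so the output is deterministic.
        attrs = " ".join(f"{name}={quoteattr(str(value))}" for name, value in sorted(transform.items()))
        body = f'<voice-transformation type="Custom" {attrs}>{body}</voice-transformation>'
    return f'<speak version="1.0">{body}</speak>'


if __name__ == "__main__":
    print(mark_up("We're sorry for the delay.", expression="apology", rate="-10%", breathiness="20%"))
```

Run as a script, this prints a single `<speak>` document with the apology inflection nested inside the voice transformation; a string like that would then be sent as the text of a synthesis request. Keeping the markup in one small function is a design choice that lets a voice designer adjust a brand’s “tone of voice” in one place rather than in every prompt.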

Marketers who use Watson are often surprised not only by how flexibly it can match their applications’ styles but also by its ability to express emotion. As we spoke, I learned that Picheny has a deep passion for opera, so I knew he’d understand when I asked about Watson’s expressive range on a vocal level: would it be possible to sculpt a robotic voice that captures the nuances of a brand’s voice? “We have found that creating a voice is as much of an art as it is a science,” he told me. “Our research is focused on giving more and more control to the voice user interface designer using a simple markup language applied to the text.”

So, how does that all come together? This video about cognitive solutions for businesses, which features IBM’s Watson in a robot body with its own voice configuration, should give you an idea.

Dimension 3: The User–Robot Relationship

A brand’s voice is shaped largely by the language it uses, the topics it addresses, and the audience it speaks to, but it is almost always expressed through visual or written content. Rarely is a brand’s voice literally audible, and rarer still do consumers get to build a one-on-one relationship with a brand. That is why these humanlike synthetic voices could mark the beginning of a digital marketing transformation, opening doors for brands to craft their voices in an almost four-dimensional way.

For Picheny, this isn’t as far-fetched an idea as it might sound. “Through careful selection of voice talent, it is always possible to get a voice that represents your brand,” he said. “The important thing is to test drive the voice on a representative cross section of decision makers, as the perception of a voice is very subjective.”

The key for brands, then, lies in research. If marketers learn more about their audiences and then draw on linguists and engineers who study how users experience voice, they can develop distinct brand personalities that might, in time, almost take on lives of their own.

One morning, in the not-too-distant future, I’ll wake up and say “good morning” to my virtual assistant. Its voice will have changed, but it will somehow sound right—perhaps more human—for that precise point in time. At that moment, we’ll have achieved the fourth dimension: a level of customization that readily adapts to me over time.