Back in 2008, Yair Lavi founded Tonara, an interactive app that “listens to” musicians and assists them as they play. He has carried the lessons learned there to his latest venture, 3DSignals, where the co-founder and head of algorithms uses ultrasonic sensors and deep-learning software to detect anomalies in machine sounds. “Industrial music,” you might say.

Sound interesting? Take a look…

Smart Industry: How do you define “deep learning”?

Yair: Deep learning is a method of artificial intelligence used to detect patterns in data, either independently or based on some type of training. In general, deep-learning applications are built around artificial neural networks that loosely resemble what we know about the human brain’s high-level operations. During the last few years, the performance of deep-learning algorithms has improved significantly due to the availability of high computational power and large amounts of training data. Most deep-learning research and applications worldwide focus on computer vision and image analysis, while most acoustic-related research and applications are limited to speech recognition.

Smart Industry: What is unique about your approach to ultrasonic monitoring / predictive maintenance?

Yair: 3DSignals’ algorithms team is pushing the space forward by specializing in acoustic deep-learning applications in general, not just speech. In addition, we provide algorithms based on physical models of machines, which bring value to our customers from day one because they don’t require an extensive learning phase. We’re the first and currently only company to use airborne, ultrasonic, industrial-grade microphones for predictive maintenance. We provide an end-to-end solution comprising hardware (sensors and edge computers), software and a cloud solution, and an algorithmic solution (both deep-learning based and physical-modeling based). We upload data collected by our own sensors, and by other existing sensors should the client wish to connect them as well, and make the data and its analysis available from any web-connected device (laptops, tablets and mobile phones).

Smart Industry: Why the new approach?

Yair: When you look at what’s currently available to monitor industrial machines, passive ultrasonic gear is limited to handheld devices with headsets that engineers or technicians need to carry with them from machine to machine. Monitoring requires contact with the machine, which isn’t always feasible. The output of these systems is not the same as the natural sound you hear, and it requires training to understand the signals and log the events. We take a different approach: our sensors are present near the machine at all times and are always connected, not just to diagnose but to provide real-time alerts and predictions that, if addressed in a timely manner, can prevent costly machine breakdowns and accidents. We place our sensors a few inches or feet from the machine being monitored, with highly directional microphones and noise-reduction algorithms to remove the effect of surrounding noises. What the user receives is the clean, natural sound of the machine, as if they were isolated in a room with only that machine.

Smart Industry: How do you use airborne acoustics?

Yair: The sound a machine makes propagates through all its materials. Contact-based sensors sense the sound on the machine’s surface (i.e., vibrations). But if you listened to these vibrations, they would not resemble the natural sound you would hear standing next to the same machine, and they wouldn’t necessarily translate immediately into meaningful insights for an engineer or technician. Until now, very little work has been done on taking the sounds present in the air and analyzing them with advanced algorithms. We’re proud to be pioneering this domain.

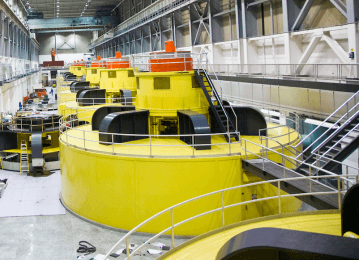

In one case, our sensors were monitoring water turbines in hydroelectric plants [pictured above]. With 3DSignals, our customer can tell whether the turbine is not feeding the system optimally, whether the generator is underperforming in comparison to the input from the turbine, and whether the bearings in the whole system are developing wear and tear that endangers the safety of the plant or the lifetime of the system. They can do all of this because our platform enables them to listen to processes in chambers both above and below ground, which they can’t regularly monitor with contact-based sensors because of the turbines’ spinning and the overall hostile industrial environment.

Smart Industry: Your background is in music. How does that experience translate to the world of machines?

Yair: In my previous start-up company, Tonara, we developed algorithms that analyze the music performed by musicians on their acoustic instruments. Having a lot of experience in acoustic signal processing in music has contributed greatly to my understanding of the sounds generated by machines, as many physical qualities of sound are common to both phenomena. However, while music features several key characteristics, such as harmonic relations between different components of the acoustic signal, industrial machines, as we all know, play a different, usually less harmonic tune. At 3DSignals, we have learned to listen to this different type of “machine music,” and we specialize in detecting when machines are “playing” out of tune.
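
Editor's illustration: to make the idea of a machine “playing out of tune” concrete, here is a minimal sketch that compares the frequency content of a new recording against a baseline taken from a healthy machine. It is not 3DSignals’ method, which the interview describes only as deep-learning and physical-modeling based; it is a far simpler spectral comparison. The signals are synthetic, and every name and number here (`magnitude_spectrum`, `is_anomalous`, the 0.05 threshold, the tone frequencies) is a hypothetical chosen for illustration.

```python
import math

def magnitude_spectrum(samples):
    """Naive discrete Fourier transform: normalized magnitude of each frequency bin."""
    n = len(samples)
    spectrum = []
    for k in range(n // 2):
        re = sum(s * math.cos(2 * math.pi * k * i / n) for i, s in enumerate(samples))
        im = -sum(s * math.sin(2 * math.pi * k * i / n) for i, s in enumerate(samples))
        spectrum.append(math.hypot(re, im) / n)
    return spectrum

def spectral_distance(baseline, observed):
    """Euclidean distance between two magnitude spectra of equal length."""
    return math.sqrt(sum((b - o) ** 2 for b, o in zip(baseline, observed)))

def is_anomalous(baseline, observed, threshold=0.05):
    """Flag a recording whose spectrum drifts too far from the healthy baseline.
    The threshold is an arbitrary illustrative value, not a tuned one."""
    return spectral_distance(baseline, observed) > threshold

def synth(components, sample_rate, n):
    """Synthetic 'machine sound': a sum of sine waves given as (frequency_hz, amplitude)."""
    return [
        sum(a * math.sin(2 * math.pi * f * i / sample_rate) for f, a in components)
        for i in range(n)
    ]

SAMPLE_RATE = 1024  # samples per second (assumed)
N = 256             # samples per analysis window (assumed)

# Healthy machine: a fundamental hum at 64 Hz plus one harmonic at 128 Hz.
healthy = synth([(64, 1.0), (128, 0.5)], SAMPLE_RATE, N)
# Faulty machine: the same hum plus a new, non-harmonic component at 200 Hz.
faulty = synth([(64, 1.0), (128, 0.5), (200, 0.4)], SAMPLE_RATE, N)

baseline = magnitude_spectrum(healthy)
print(is_anomalous(baseline, magnitude_spectrum(healthy)))
print(is_anomalous(baseline, magnitude_spectrum(faulty)))
```

In this toy setup the extra 200 Hz component is what pushes the faulty recording away from the baseline. A real system would have to cope with background noise, changing operating conditions and far subtler deviations, which is where the learned models described in the interview come in.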