Some owners of the Google Home may feel a bit disappointed at its lack of native features, but others, such as myself, are holding onto hope that third-party developers will be able to plug the holes in its functionality. We’re excited to see the work that developers such as João Dias have put into supporting Google Assistant, but unfortunately the project is left in limbo while Google takes its sweet time inspecting it for approval.

Fortunately, though, Mr. Dias has something else to share that should cause some of you to start salivating. He recently created an easy way to build an API.AI webhook that handles third-party APIs, dubbed the Voice Assistant Webhook. If you’ll recall, API.AI is the service that powers natural language voice interactions for third-party services integrating with Google Assistant, allowing developers to respond to user queries in a rich, conversational manner. Thanks to the Voice Assistant Webhook, however, any developer can easily start integrating any available API with Google Assistant.

In the video shown above, Mr. Dias asks his Google Home about information related to his Spotify account, his YouTube channel, and his Google Fit data. None of these commands are natively supported on the device, but he was able to hook into each service’s publicly available API to extract the information he wanted. This is possible thanks to one of Mr. Dias’s more popular Tasker plug-ins: AutoVoice.

The AutoVoice application (you must join the beta before you can access its Google Home related features) lets you create voice actions that react to complex voice queries, either through its Google Now intercepting accessibility service or through the Natural Language API (powered by API.AI). Now Mr. Dias is extending AutoVoice’s capabilities further: any voice data intercepted from Google Now (or captured via an AutoVoice voice dialog prompt) can be passed on to your own backend server, where a Python script calls the third-party API and sends the response back to AutoVoice.

Voice Assistant Webhook – in Summary

Let’s break down the general setup process so things make more sense. Setup is fairly simple, provided you can follow all of the instructions outlined on the GitHub page, but do remember that this is still beta software and that the plug-in structure is not final.

When you activate Google Now or start an AutoVoice prompt, AutoVoice recognizes your speech and sends it to API.AI for matching. The power of API.AI is that it translates the everyday language of your speech into the precise command, with parameters, that the web service requires. The command and any parameters that were set up in API.AI are then sent to the web service, where they are executed by a Python web application. The web application responds to the command with the results of the query, which are converted into natural language text through API.AI and sent back to your device. Finally, AutoVoice speaks the output on your device.
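To make the webhook leg of that round trip concrete, here is a minimal sketch in Python. It is not Mr. Dias’s code: it assumes the API.AI v1 webhook format, in which the matched action and its parameters arrive under `result` and the reply goes back in the `speech` and `displayText` fields. The handler table and the `source` value are hypothetical.

```python
import json
from typing import Callable, Dict

# A handler receives the intent's parameters and returns the text to speak.
Handler = Callable[[Dict[str, str]], str]


def handle_webhook(body: str, handlers: Dict[str, Handler]) -> str:
    """Route an API.AI-style (v1) webhook request to the handler for its action."""
    request = json.loads(body)
    result = request.get("result", {})
    action = result.get("action", "")
    parameters = result.get("parameters", {})

    handler = handlers.get(action)
    if handler is None:
        # Action names are matched exactly; anything else falls through here.
        text = f"Sorry, I don't know how to handle {action!r}."
    else:
        text = handler(parameters)

    # "speech" is spoken on the device; "displayText" is shown as text.
    return json.dumps({"speech": text, "displayText": text, "source": "webhook-sketch"})


# Example: a hypothetical table with a single "helloworld" action.
handlers = {"helloworld": lambda parameters: "Hello World!"}
print(handle_webhook('{"result": {"action": "helloworld", "parameters": {}}}', handlers))
# prints {"speech": "Hello World!", "displayText": "Hello World!", "source": "webhook-sketch"}
```

Keeping the action-to-function mapping in a plain dictionary means adding a new command is just one more entry, which is why the action name typed into API.AI has to match the key exactly.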

The process sounds much more complicated than it really is, and although I had a few hiccups getting my own webhook set up, João Dias was very quick to respond to my inquiries. For those who want to try it, I walk through the setup steps at the end of the article.

What does this mean overall, though? It means developers have an easy way to integrate Google Now/Assistant with any third-party API they like. This was possible before, but Mr. Dias has made the whole process a lot simpler to develop.

Voice Assistant Webhook – Uses

Basically any existing API can be hooked into this framework with minimal coding, which is an exciting prospect! You could, for example, get stock updates or the latest sports results, hook into Marvel Comics, get information on Star Wars ships and characters through a Star Wars API, or hook into one of the online craft beer APIs to get beer recipes! On a more practical note, both Fitbit and Jawbone have existing APIs, so you could hook into those and have your fitness data read to you. The possible uses are limited only by your imagination and a sprinkling of work.
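As an illustration of how small a new integration can be, the sketch below is a hypothetical handler that looks up a character in the public Star Wars API. The endpoint (`https://swapi.dev/api/people/?search=`), the `name` and `height` response fields, and the `character` parameter name are all assumptions for illustration, not part of Mr. Dias’s project.

```python
import json
import urllib.parse
import urllib.request
from typing import Callable, Dict

# Assumed endpoint: the public Star Wars API's people search.
SWAPI_SEARCH = "https://swapi.dev/api/people/?search="


def fetch_json(url: str) -> dict:
    """Download a URL and decode its JSON body."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return json.loads(response.read().decode("utf-8"))


def star_wars_character(parameters: Dict[str, str],
                        fetch: Callable[[str], dict] = fetch_json) -> str:
    """Hypothetical action handler: describe a character found by name."""
    name = parameters.get("character", "")
    # Percent-encode the spoken name so spaces are safe in the query string.
    data = fetch(SWAPI_SEARCH + urllib.parse.quote(name))
    results = data.get("results", [])
    if not results:
        return f"I couldn't find a Star Wars character called {name}."
    person = results[0]  # take the first match only
    return f"{person['name']} is {person['height']} centimeters tall."
```

The injectable `fetch` parameter is the design choice worth noting: it keeps the speech-formatting logic testable offline, while the default still performs a real HTTP request.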

When I talked to Mr. Dias about the potential of this software, he mentioned that he has already submitted his plugins to both Amazon and Google, which will allow AutoVoice to hook directly into Google Assistant and Alexa. He is waiting on both companies to approve them, so unfortunately until that happens you won’t be able to run your own commands through such convenient mediums. Once approval is received, though, you can get started on building your own real-world ‘Jarvis’ home automation system.

Voice Assistant Webhook – Tutorial

The following explains how to get the project up and running if you would like to try it out yourself. For this walk-through we will use a basic flow in which we say “Hello I am (your name)” as the command, and in turn the response will say hello and return your name.

Setting up Heroku

The first thing you must do is set up a backend server (a free Heroku account will work, as will your own local machine). The fastest way to set this all up is to go to the GitHub project page and click through to deploy the project directly to Heroku. Make sure that you install PostgreSQL as well as all of the other dependencies linked in the instructions on Heroku!
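If you would rather experiment on your own machine first, the core of a webhook server needs nothing beyond Python’s standard library. This is a minimal sketch, not the project’s actual server (which is deployed with its own dependencies, including PostgreSQL): it accepts an API.AI-style JSON POST, answers a hypothetical `helloworld` action, and binds to the port Heroku supplies in the `PORT` environment variable, falling back to 5000 locally.

```python
import json
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


def reply_for(action: str, parameters: dict) -> str:
    """Hypothetical action table; the real project defines its own actions."""
    if action == "helloworld":
        return "Hello World!"
    return f"Unknown action {action}"


class WebhookHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Read the JSON body that API.AI posts to the webhook URL.
        length = int(self.headers.get("Content-Length", 0))
        request = json.loads(self.rfile.read(length) or b"{}")
        result = request.get("result", {})
        text = reply_for(result.get("action", ""), result.get("parameters", {}))

        # Answer with the v1-style fields API.AI reads back.
        payload = json.dumps({"speech": text, "displayText": text}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def make_server(port: int) -> HTTPServer:
    return HTTPServer(("", port), WebhookHandler)


if __name__ == "__main__":
    # Heroku tells a web process which port to bind through $PORT;
    # fall back to 5000 when running on your own machine.
    make_server(int(os.environ.get("PORT", "5000"))).serve_forever()
```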

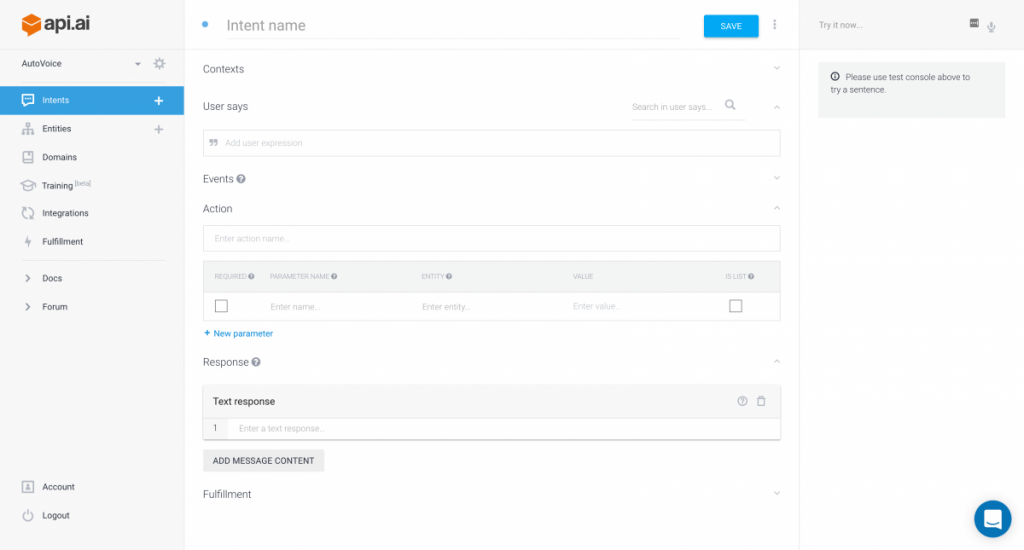

Setting up API.AI

Next, create an account with API.AI. You will need to test that all of the backend Python code is functioning properly before we mess with AutoVoice. In API.AI, add your webhook URL; this allows API.AI to communicate with the Heroku app we just deployed. Once you have created your “Agent,” as API.AI calls it, go to the agent’s settings and note the Client Access Key and the Developer Access Key. Then go to the Intents section and create a new intent called “Hello World”. Under the “User says” section you can type anything, but I suggest “Hello World”, as this is the command you will speak to your device. Next, under “Action” type EXACTLY helloworld; this is the action that is called on our Heroku application.

Mr. Dias has already created this action for us to use; it responds with “Hello World!”, and the action name must match the one in the Heroku application exactly. Finally, at the bottom of the page under the “Fulfillment” heading there is a checkbox called “Use Webhook.” Make sure it is checked, as this option tells API.AI to pass the action to your Heroku app rather than trying to resolve the command itself. Remember to save the new intent using the “Save” button.

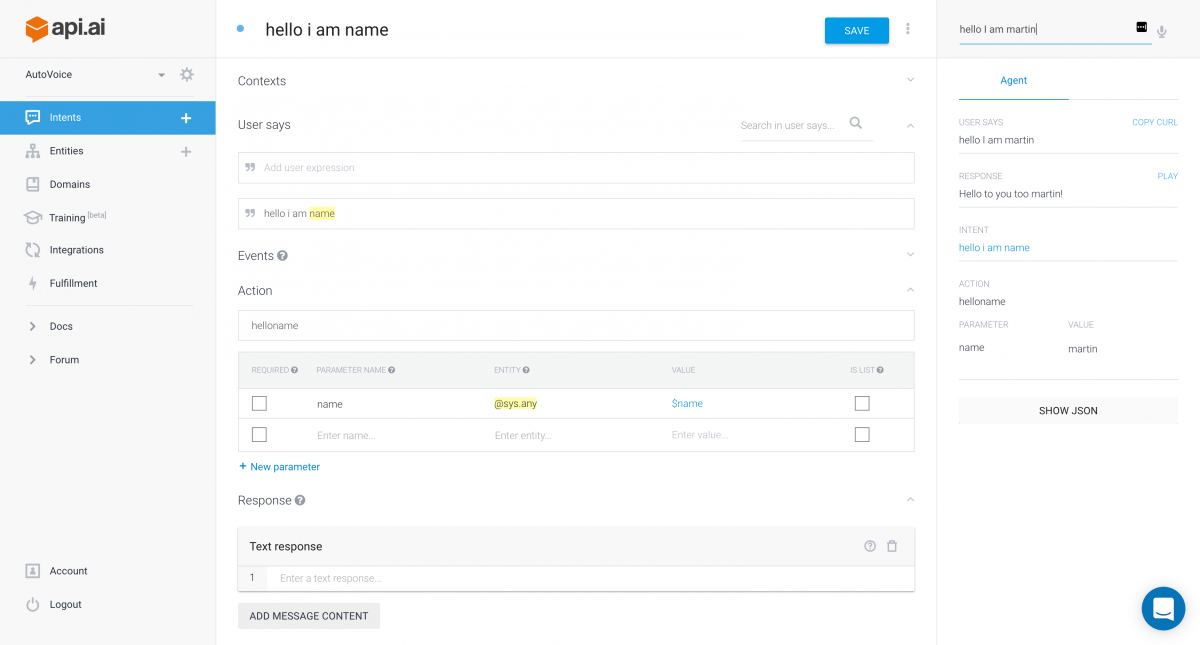

Now we can test this using the “Try it Now…” panel on the right. You can either click the microphone and say “Hello World” or type hello world in. Under the response portion you should see “Hello World!”, which is coming from our Heroku application. I have noticed that free Heroku accounts put the web service to sleep after 30 minutes of inactivity, so I have sometimes had to send a command twice to get the correct response.
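You can also poke the deployed webhook directly from your computer, which helps when a sleeping free dyno makes the first request fail or time out. The sketch below sends a trimmed-down, API.AI-style request (only the `result.action` and `result.parameters` fields, not the full payload API.AI actually sends) and retries once. The retry count, pause, and timeout are arbitrary assumptions, and the functions are hypothetical helpers rather than part of the project.

```python
import json
import time
import urllib.request
from typing import Callable, Optional


def build_request(action: str, parameters: Optional[dict] = None) -> bytes:
    """Build a trimmed-down, API.AI-style body (only result.action/parameters)."""
    body = {"result": {"action": action, "parameters": parameters or {}}}
    return json.dumps(body).encode("utf-8")


def post_json(url: str, data: bytes, timeout: float) -> dict:
    """POST a JSON body and decode the JSON reply."""
    req = urllib.request.Request(url, data=data,
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def ask_webhook(url: str, action: str, attempts: int = 2, timeout: float = 30.0,
                pause: float = 1.0,
                send: Callable[[str, bytes, float], dict] = post_json) -> str:
    """Send a test request, retrying in case a sleeping dyno is still waking up."""
    data = build_request(action)
    for attempt in range(1, attempts + 1):
        try:
            return send(url, data, timeout)["speech"]
        except OSError:
            # URLError and socket timeouts are both OSError subclasses.
            if attempt == attempts:
                raise
            time.sleep(pause)
    raise ValueError("attempts must be at least 1")
```

For example, `ask_webhook(YOUR_WEBHOOK_URL, "helloworld")` should return “Hello World!” if the intent and action are wired up as described, where `YOUR_WEBHOOK_URL` is a placeholder for the URL you entered in API.AI.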

Setting up AutoVoice

On your phone, you will need to install the latest beta version of AutoVoice (and enable its Accessibility Service, if you want commands to be intercepted from Google Now). Open the application and tap on “Natural Language” and then “Setup Natural Language.” This will take you to a screen where you need to enter the Client Access and Developer Access Keys you saved from API.AI. Enter both of those and follow the prompts that are displayed. The application will verify your tokens and then return you to the first screen.

Tap on “Commands” and you will be able to create a new command. Note that AutoVoice will use your access tokens to download any intents you have already created, so you should see the “Hello World” example we just set up. AutoVoice may also offer to import some basic commands; you can play with these to see how it all works. Moving on, we are going to create a command that speaks our name back to us when we say the phrase “Hello I am xxx”, where xxx is your name.

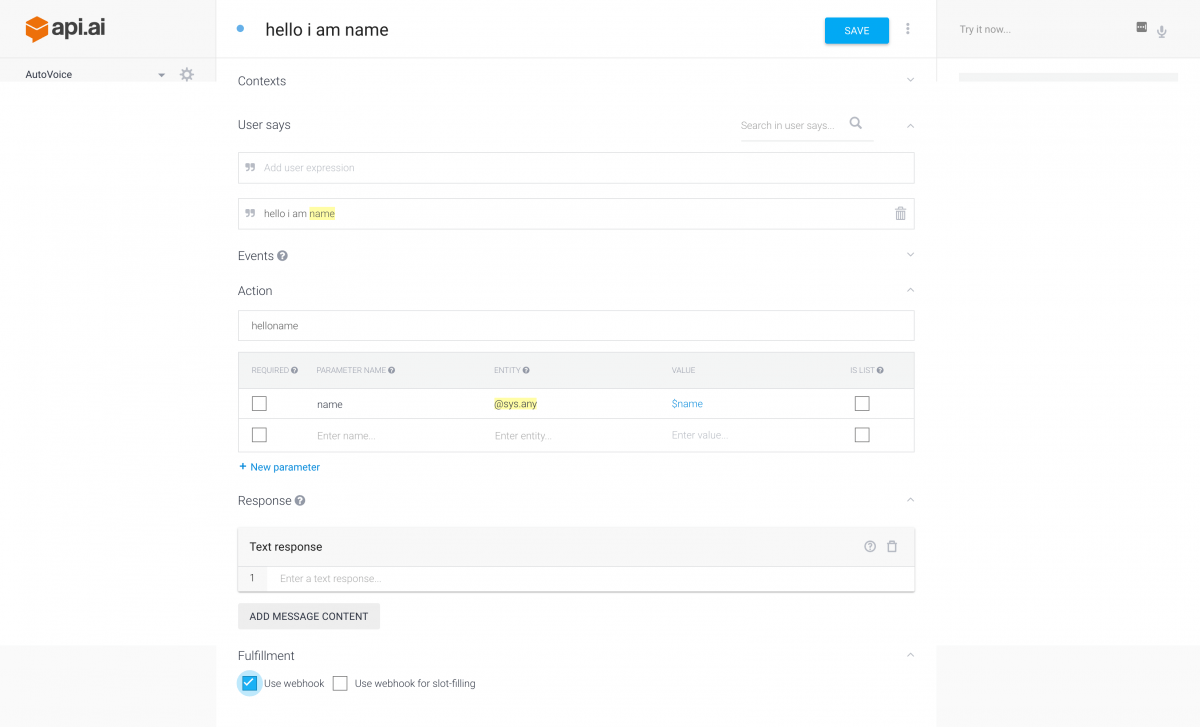

Click on the big “+” in the “Natural Language Intents” screen and the Build AutoVoice Commands screen is displayed. First, type in the command you want to say to execute the backend script we set up, in this case “Hello I am xxx”. Next, long press on the word “xxx” (your name), and in the popup box you will see an option to “Create Variable.” A Google voice prompt appears where you can speak your variable name, which in this case should be just “name”. You will see $name added where the name used to be. There is no need to enter a response here, as that part is handled by the Heroku web service. Click “finished” and give your intent a name. Lastly, an Action prompt is displayed where you must enter the action EXACTLY as it is defined in your web app (helloname).
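On the server side, a `helloname` action boils down to reading the `name` parameter that the $name variable fills in and building the reply. This is a hypothetical sketch rather than Mr. Dias’s implementation; it assumes the API.AI v1 request format and produces the “Hello to you too xxx!” reply this walk-through expects.

```python
import json


def hello_name(parameters: dict) -> str:
    """Hypothetical 'helloname' action: greet the user by the $name variable."""
    name = str(parameters.get("name", "")).strip()
    if not name:
        # Guard against a missing or empty name parameter.
        return "Hello to you too!"
    return f"Hello to you too {name}!"


# The key must match the Action typed into AutoVoice exactly.
ACTIONS = {"helloname": hello_name}


def respond(request_body: str) -> str:
    """Turn an API.AI-style (v1) request into a speech reply."""
    result = json.loads(request_body).get("result", {})
    handler = ACTIONS.get(result.get("action", ""))
    text = handler(result.get("parameters", {})) if handler else "Unknown action"
    return json.dumps({"speech": text, "displayText": text})


print(respond('{"result": {"action": "helloname", "parameters": {"name": "Alex"}}}'))
# prints {"speech": "Hello to you too Alex!", "displayText": "Hello to you too Alex!"}
```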

This matches how we tested API.AI. AutoVoice will update API.AI for you, so you do not have to use API.AI to create any new commands in the future. There is one small problem I noticed in the version I tested: the “Use Webhook” checkbox we ticked under Fulfillment is not checked automatically when we create a command in AutoVoice, so we need to go back to API.AI and make sure it is marked. This will likely be fixed very shortly, though, as Mr. Dias is prompt in responding to feedback.

Now you can try out your new command. Start up the Google Now voice prompt (or create a shortcut to the AutoVoice Natural Language voice prompt) and say “Hello I am xxx” which should shortly return “Hello to you too xxx!”

I know the entire setup is a bit awkward (and likely out of reach for non-developers), but Mr. Dias says he is working on streamlining the process as much as possible. Personally, though, I feel it’s a great start and quite polished for beta software. As I noted earlier, Mr. Dias is waiting for Google and Amazon to approve his plugins so that this will work seamlessly with Google Assistant and Amazon Alexa as well. Soon, you will be able to connect these two voice assistants to any publicly available third-party API on the Internet!

Many thanks to João Dias for helping us throughout the article!