Designing great user interfaces in the internet of things relies on three core principles: simplicity, interoperability and value appreciation. The Amazon Echo embodies all three.

The Amazon Echo has sold more than 4 million units since launching in November 2014, according to Consumer Intelligence Research Partners. One early employee said the Echo hit a million pre-orders in less than two weeks. Another study, by Slice Intelligence, found Echo’s sales grew by an average of 342% between Q3 and Q4 of 2015. These figures demand attention, especially against the backdrop of a somewhat sluggish smart home sector in which a mere 10% of Americans have purchased connected in-home devices.

There are lots of reasons Amazon’s Echo product has burst onto the smart home scene — and arguably into the mainstream — so rapidly in the last year. First, it’s a natural extension of Amazon’s strategy to disrupt brick-and-mortar retailers — providing more personalized convenience through intelligent software applications. Second, the retail giant has deep pockets, which enables lots of trial, error, failures and talent acquisition.

But let’s look deeper than Amazon itself. The real reasons for the success (or at least the notable promise) of the Amazon Echo lie in its realization of three of the most important elements of effective IoT UX design.

IoT UX design lesson #1: It’s easy to use … really easy

Many product companies make the grave mistake of over-complicating connected versions of their products — too many buttons on a screen, too many levels to tap through to accomplish a task, too many features in an app, too many sensors (and not enough value) to justify a low battery life.

The most essential step in effective connected-product development is to build a simple IoT UX and UI.

The retail giant’s voice-interactive speaker looks a lot like your average portable music player: cylindrical and slim, with a light and a button or two. But where the Echo prevails is in its ease of use. Although it’s not the first product to ship with voice recognition and interaction, the Amazon Echo is a device users talk to, (mostly) without having to repeat themselves. Sure, the device has an app, but the fact that tapping, pinching and swiping are the secondary mode of interaction sets the Amazon Echo apart. It is simply easier to use than any other smart home device. Voice interaction also improves accessibility for the elderly, for folks with special needs, for kids, and for anyone who prefers or needs a hands-free, heads-up experience.

Using the device is simple, screenless and, by most metrics, an improvement on our current heads-down, both-hands-involved mobile app culture. Despite the simplicity of interacting with the Echo, under the hood lies a complex and growing universe of software and hardware intelligence. Artificial intelligence and an Alexa-specific speech recognition system rely on multi-domain systems to work out what users want with the least amount of friction. As a result, the software and architecture underlying the Echo are driving the IoT user experience far more than the cylindrical hardware speaker itself.
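To illustrate what "multi-domain" disambiguation means in principle, here is a deliberately tiny sketch. It is not how Alexa works internally; the domains, keywords and the `route` function are all hypothetical, invented only to show the idea of scoring an utterance against several domains and asking for clarification when no single domain clearly wins.

```python
from dataclasses import dataclass
from typing import Dict, Optional

# Hypothetical domains and keywords, invented purely for illustration.
DOMAIN_KEYWORDS = {
    "music": {"play", "song", "album", "volume"},
    "smart_home": {"turn", "lights", "thermostat", "dim"},
    "shopping": {"order", "buy", "reorder", "cart"},
}


@dataclass
class Routing:
    domain: Optional[str]   # None means "ask the user to clarify"
    scores: Dict[str, int]  # keyword matches per domain


def route(utterance: str) -> Routing:
    """Score an utterance against each domain by simple keyword overlap."""
    words = set(utterance.lower().split())
    scores = {name: len(words & kw) for name, kw in DOMAIN_KEYWORDS.items()}
    best = max(scores.values())
    winners = [name for name, score in scores.items() if score == best]
    # No match at all, or a tie between domains: do not guess.
    if best == 0 or len(winners) > 1:
        return Routing(None, scores)
    return Routing(winners[0], scores)


if __name__ == "__main__":
    print(route("turn on the lights"))
    print(route("play my order"))
```

A production system would use statistical models rather than keyword sets, but the design point is the same: the less often the device has to ask "what did you mean?", the lower the friction for the user.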

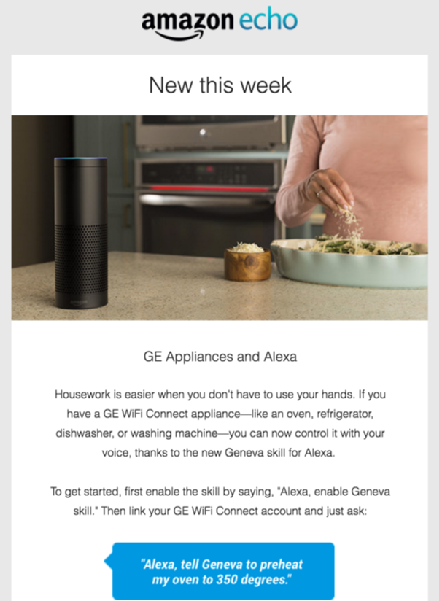

Example of a weekly email promoting new features and how users can immediately participate

What simple looks like for the Amazon Echo:

- Voice interaction primary; mobile app secondary

- Simple setup

- Minimal aesthetic design

- Weekly emails communicating new features and how to sample them

- Offers frequent suggestions for exploring device capabilities

- Machine learning enabling speech recognition optimization and personalization over time

- Interoperability across devices

IoT UX design lesson #2: It’s interoperable and ecosystem-driven

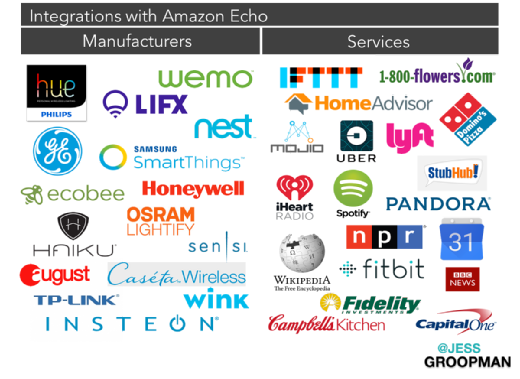

The Amazon Echo also delivers on the most important requirement for the success of any connected object: the ability to interact with, and be interacted with by, other objects, institutions, users, protocols, functions and so on. The device integrates with a wide variety of smart home appliances, such as thermostats, fans and lightbulbs, as well as other smart home automation hubs like SmartThings and Insteon. (You can find Amazon’s living list of integrations here.)

Amazon’s integration ecosystem extends to other companies, services and platforms. For example, users can directly order a pizza from Domino’s, call for a ride with Lyft or Uber, voice-order flowers through the 1-800-Flowers app, turn down the heat on their Honeywell thermostat or turn on the lights with their Philips Hue bulbs. The IoT UX ranges from informational integrations with Wikipedia and Bing to personalized news briefings from NPR or the BBC.

The Echo becomes more interesting to Amazon’s partners through customer service and support use cases. Amazon’s partnerships with IoT platform Zonoff and with HomeAdvisor, an online marketplace for local service technicians, plumbers, electricians, etc., allow users to use the Echo to solve problems far beyond purchasing products and services off Amazon or listening to music. Ultimately, the value of integrations compounds when users can do more than purchase; it becomes transformative when they can solve problems and accomplish tasks. It also aligns Amazon more closely with its customers.

Of course, interoperability and integrations aren’t solely about sensors and communications protocols, nor does the value they enable end with corporate partnerships. Perhaps the most distinctive manifestation of the Echo’s openness is that Amazon has opened two distinct types of development kits to the community: the Alexa Voice Service and the Alexa Skills Kit.

Alexa Voice Service allows hardware manufacturers and developers to integrate Alexa, the voice service behind the Echo, into their own devices and/or applications with a few lines of code, so that users can interact with those devices by voice immediately. The Alexa Skills Kit offers developers a variety of APIs that enable them to build or add new features, functions and applications for Alexa.
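To make the Skills Kit idea concrete: a custom skill is essentially a piece of code that receives a JSON request describing what the user asked for and returns a JSON response telling Alexa what to say. The sketch below is a minimal illustration that roughly follows the request and response shapes Amazon documents for custom skills. The intent name `GetLessonIntent`, the spoken phrases and the helper `build_response` are hypothetical, and Amazon’s current documentation should be treated as the authority on the exact fields.

```python
def build_response(text: str, end_session: bool = True) -> dict:
    """Wrap plain text in the response envelope a custom skill returns."""
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": text},
            "shouldEndSession": end_session,
        },
    }


def handle_request(event: dict) -> dict:
    """Dispatch on the request type, then on the intent name."""
    request = event.get("request", {})
    request_type = request.get("type")

    if request_type == "LaunchRequest":
        # The user opened the skill without asking for anything specific.
        return build_response("Welcome. Ask me for a design lesson.",
                              end_session=False)

    if request_type == "IntentRequest":
        intent_name = request.get("intent", {}).get("name")
        if intent_name == "GetLessonIntent":  # hypothetical intent name
            return build_response("Keep it simple: voice first, app second.")

    # Anything unrecognized gets a polite fallback.
    return build_response("Sorry, I didn't catch that.")


if __name__ == "__main__":
    sample = {"request": {"type": "IntentRequest",
                          "intent": {"name": "GetLessonIntent"}}}
    print(handle_request(sample))
```

In practice a function like `handle_request` would sit behind a web endpoint or a serverless function that Alexa calls. The point of the sketch is how little code separates a developer’s idea from a new voice capability.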

According to Amazon, more than 10,000 developers have registered to integrate Alexa into their products. By opening up the device to the entire ecosystem, Alexa has already gained more than 1,600 skills (up from 130 in January 2016), and it alerts users to new skills each week. The Echo’s open, expanding ecosystem and its interoperability don’t just create a better out-of-the-box experience; they also extend the range of potential use cases, users and value over time.

What interoperable looks like for the Amazon Echo:

- Interoperability across Amazon devices

- Interoperability across dozens of other connected devices

- Interoperability across dozens of other service providers through APIs

- Open SDKs offer integration capabilities for anyone (enterprise, startup, DIY)

- Machine-learning capabilities allow constituencies to observe user adoption and preferences

IoT UX design lesson #3: Value appreciates (not depreciates) over time

For many products, the purchase date marks the date the product begins to depreciate in value. In an analog world, this reality is what supports a product-centric business model. But sensors, software and data shift this paradigm. Successful business models in a connected world are no longer based solely on product sales, volume and margin; the true value of connected products lies in the data-driven, service-centric business models that products and integrations enable. This is inherent to the physical and digital design of the Amazon Echo; its business model is woven into its very design. Although the Amazon Echo is indeed a physical product with tangible surfaces and just under a dozen embedded sensors, the product functions as a platform first and foremost.

In an interview with Fortune, Dave Limp, SVP of Amazon devices, explained this device strategy expressly, stating, “What we’re trying to do is build a business model where we sell our products — the hardware side of the products — effectively at cost. And we think that aligns ourselves very well with customers so that if they take a product [like] the Echo and they just brought it home, didn’t like it and put it in a drawer, we shouldn’t profit from that as far as we’re concerned. We really believe — and the team believes — that we should align ourselves with both the business model and the product, so that if customers use it over a period of time, then we’ll take a small amount of profit every time they have a transaction. It might be an Audible book, it might be a Kindle book, it might be shopping as they go through the lifecycle of that product.”

This is, of course, in addition to the massive amounts of data the company is collecting about user interactions, user preferences and variations across demographics, households, integration preferences, developer trends and so on. Amazon has designed the device to learn from every interaction and uses those learnings to constantly evolve the IoT UX by improving intelligence, voice recognition, speed, service, new skills, partner offerings and overall customer experience.

From a design standpoint, the front end is designed to drive seamless interactions and the back end is designed to constantly improve interactivity over time. The gadget itself merely drives value across the broader ecosystem of which it is a part.

What value appreciation looks like for the Amazon Echo:

- Device learns user behaviors over time (individual and across broader user base)

- Users enjoy more features over time

- Sensors and software collect information designed to inform improvements and decision-making

- Interoperability allows device to support broadening range of use cases

- Supports new revenue for Amazon by enabling new partnerships

- Supports new (in-home) channel for partners’ customer programs